Introduction

This blog consists of two parts. The first part highlights the business aspect that lays the foundation for ‘Azure Stack’. The ‘second part‘ introduces ‘Azure Stack’ as a technical product; briefly explaining all functionalities and scenarios. I highly recommend reading this part first to really understand the product’s full potential.

If you do not have time to read the whole blog, then head over to the ‘Conclusions‘ section.

My goal for this blog was to explain why Microsoft-oriented, and even Linux-oriented, IT organizations need ‘Azure Stack’ in the data center. As a technical person, I can tell you everything that is great about ‘Azure Stack’, but that would only cover the technical side. That did not feel complete, especially when you want to make non-technical people aware of its full potential and of why it is important for cloud businesses to start as soon as possible. Every product has a business aspect behind it, which can’t be left underexposed; certainly not in a new cloud era fueled by the ‘Software-Defined Data Center’ (SDDC) and containerized ‘Platform as a Service’ (PaaS) offerings: a second cloud era, in which applications are re-invented.

Not only does the ‘Azure Stack’ slogan, “Azure brought to your data center”, sound incredible, but the whole concept behind it too! It is an entirely new way of doing business in the cloud. Opening up a whole new ball game, changing clouds dramatically, and the way companies are going to invest in IT. After some extensive research, things were getting clear enough for me to write this blog post, and share my thoughts and findings with you. It is not easy getting the complete cloud landscape picture clear, each time scratching another surface. Not only because of all the time needed researching into things and its enormous vastness, but also because of rapid developments happening every day. So please don’t sue me if you find any incompleteness or inaccurate, outdated information :) This blog is solely here to share my vision with substantiated information about the cloud revolution happening today. I hope it inspires you enough to research or play with these exciting technologies yourself. Perhaps, also visualizing what this revolution could mean for your business and for you as a professional.

Business value

Cloud and application landscape

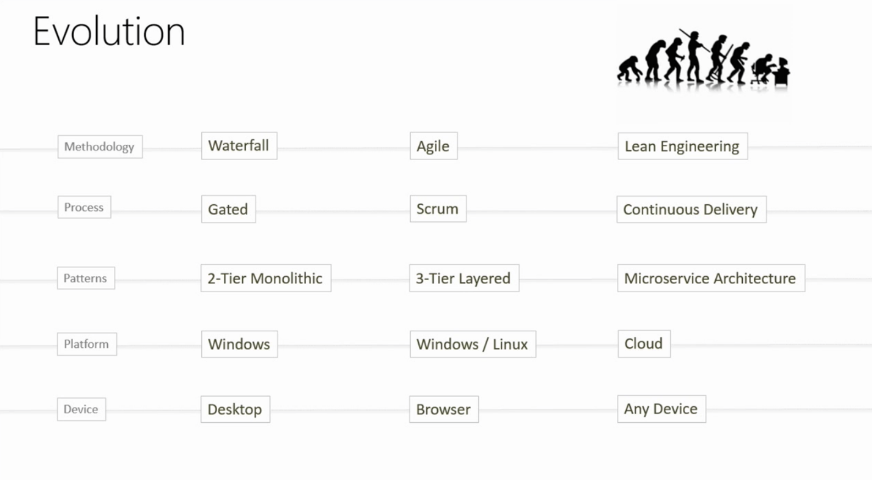

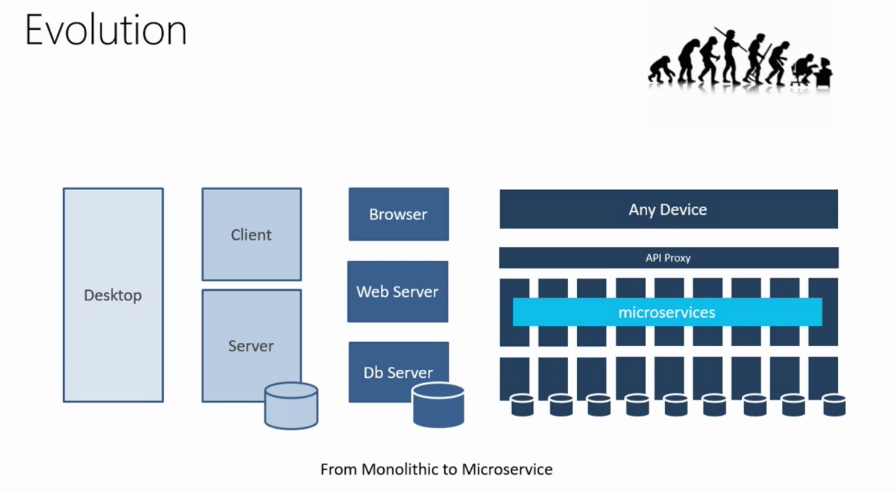

The application landscape is changing rapidly with endless new cloud-hosted possibilities. As a result, more and more traditional applications are transformed into cloud-native ones. This means that businesses, and in particular ‘independent software vendors’ (ISV’s), are utilizing new technology, which their developers have adopted to simplify application compatibility, development, and, more importantly, deployment. These innovations fuel the consistent streamlined application delivery model, where deploying, testing, and bringing an application to production is a matter of hours instead of weeks or even months.

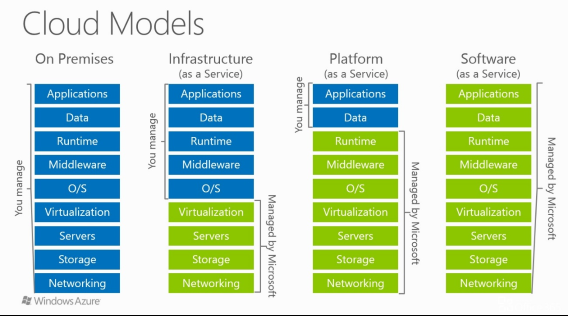

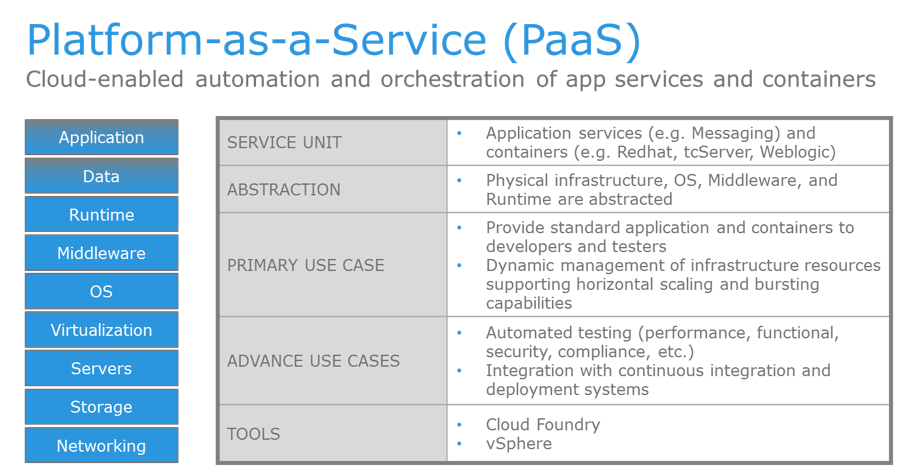

Making use of these new cloud application deployment technologies, in combination with agile DevOps processes supporting a rapid delivery model, provides enormous efficiency and flexibility. More and more traditional (monolithic) client-server applications are turning into cloud service compatible ones. They can be managed by the IT admin as an ISV specific ‘Software as a Service’ (SaaS) application, or developer deployed as a custom ‘Platform as a Service’ (PaaS) application in the public or hosted cloud. These ‘PaaS’ services offer various development ‘application programming interfaces’ (API’s), and highly automated resource orchestration managers. They provision and manage all resources needed by the application, completely taking care of the underlying infrastructure.

By easily deploying applications to a service in the public or hosted cloud, companies do not have to rely on their traditional complex infrastructure. This infrastructure would otherwise host their monolithic client-server application, either on older physical infrastructure on-premise or at a cloud service provider using ‘Infrastructure as a Service’ (IaaS). In such environments, deploying an application with all of its supporting tiered systems (compute, network, security, and storage) could take ages. Updating one system means updating every system that depends on it, and thus the whole tier. Scaling a system because it has become a bottleneck means scaling the rest of the tier with it. Scripting and manual configuration are standard practice in these environments for scaling, maintaining, and deploying systems, which makes consistent, reproducible deployments very hard to achieve.

The complexity involved for just one single application makes it already seem like an outdated and very expensive approach. Expenses are made in hardware, support, maintenance, and qualified IT Pro’s managing the infrastructure, as well as in the supporting staff servicing and supervising the IT (infrastructure) solution; they guarantee the quality and availability of the product to customers or stakeholders.

Still, a large portion of these traditional monolithic applications are hosted on complex infrastructure in on-premise environments and private clouds at cloud service providers. Everything is maintained by an IT workforce with a potentially high staff turnover. Sounds scary, right? However, it is also very costly and, in the end, that is what it is all about – costly operations that don’t add value anymore, certainly not in the new cloud landscape of today.

Happening now

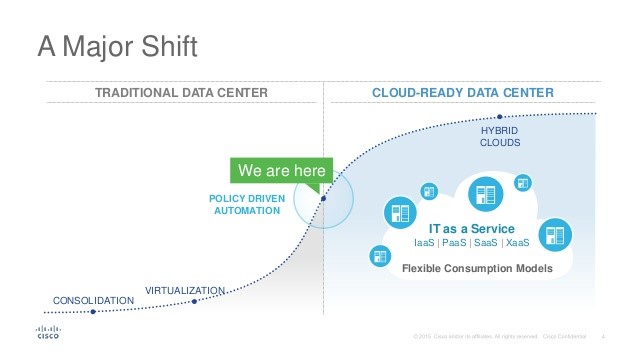

From an outsider perspective, these developments could be something that will happen in the foreseeable future. However, when you are a cloud provider or an ISV, these developments have already been taken into account. Most managing boards are fully aware of these rapid cloud application landscape shifts, both technological and cultural, occurring for the past two years, where the center of gravity has moved from traditional IaaS to PaaS in a Hybrid Cloud. This is an elastic and consistent cloud application first model, as opposed to building the complex infrastructure first and deploying the application second.

It is all about the modern, easy scalable and deployable infrastructure-independent cloud application, deployed in the Hybrid Cloud consisting of an on-premise environment connected to a public and private/hosted cloud.

Companies have to adjust their former IT strategy in a timely manner to survive in this new imminent cloud era, or face the consequences in the long run, risking being overtaken by bigger fish in the pond already adopting the new way of doing cost-effective business in the changing cloud and application landscape of today.

Shifts

The technology shift is primarily caused by new container and virtualization technology, which changes all other technology on its path to success. It is accompanied by a high degree of automation by cloud providers, who make the whole package available as a service to customers. This offers customers a rich and simplified deployment experience that their developers have adopted; that experience ultimately changes business requirements within companies, causing the cultural shift. Customers now want to scale and deliver an application quickly and efficiently, following the latest deployment technologies and standards, and in the process make better use of compute, network, storage, and application resources. By combining this deployment experience with agile processes following the latest DevOps practices, they get shorter development cycles and rapid deployments, continuously updating and adding application functionality along the way. Eventually this lets them control and reduce costs, stay ahead in the IT space, and differentiate themselves from the competition.

Competition

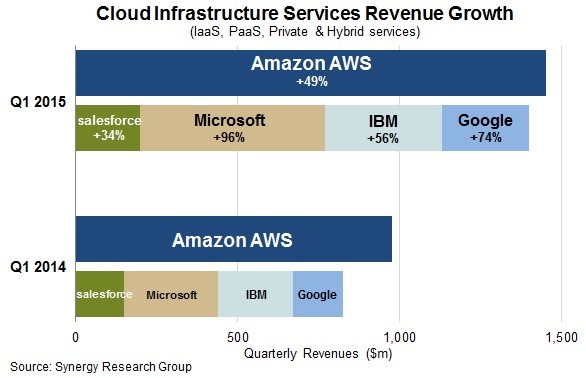

There is much competition between public cloud giants offering the newest features and technology with their services. They make it easier for customers to host applications in the cloud without requiring them to manually deploy any infrastructure, offering automated delivery models and new ‘PaaS’ services. These services are supported by highly automated resource managers that provision and orchestrate all resources needed for a particular application.

Competition between cloud providers pushes cloud technology innovations further along; service maturity is reached in months instead of years. Most features offered in the public cloud can’t be made available on-premise or in other clouds due to tight integration with related public cloud services and their complex hyper-scale setup. These features compete directly with IT solutions on-premise or in other smaller clouds, grabbing a large share of revenue from IT companies and smaller cloud providers. Eventually, over time, this results in mass consolidation of a large portion of IT cloud solutions to well established and adopted cloud services in the hosted or public cloud.

Competition is not only coming from giants like Microsoft, Amazon, and Google, but also from hardware vendors caught up by network, storage, and hardware virtualization, who ultimately change their course and also head into the software-defined everything cloud, grabbing a piece of the pie by offering their virtual software and hardware services with a software-defined data center (SDDC) in the private or hosted cloud. They are now more aggressively competing against established public cloud providers, who are partly responsible for their change in strategy in the first place.

However, public cloud providers, and even former hardware vendors (HP/Dell/EMC etc.), also have to work together by defining common standards for new cloud services, improving application experience and interoperability between clouds. One example is the ‘Open Container Project’ (containers), where industry leaders are setting new standards for how containerized applications should function and be provisioned and maintained. By competing against each other, but also working together, large cloud companies propel the cloud and application landscape into a whole new cloud era.

Fast pace, keep up

So we can see the advantages of hosting an application in the cloud without bothering about the underlying infrastructure. Of course, other businesses and, in particular, software vendors (ISV’s) see them too. They benefit the most by turning their former monolithic client-server applications otherwise deployed on-premise into cloud-native ones, offering the customer a flexible and pay-per-use application in a SaaS cloud. ISV’s have to compete with other vendors also adopting the new cloud application deployment strategy, thus driving up the pace even further. This, in turn, wakes up smaller companies with in-house developed applications, or other smaller ISV’s unaware of the changing cloud landscape, who think they are relatively safe until they or their customers are caught up by competitors already adopting the cost-effective, resilient, scalable, elastic, and consistent cloud.

In the coming years, we are going to see that customers care less about the IT operations behind the application or service they use. They want it instantly available at all times as SaaS or PaaS without any performance degradations. If cloud businesses do not adapt early enough and offer this experience, their customers will surely look elsewhere. The days of committing to a particular IT company or (hosted) cloud provider are over. Application and infrastructure landscapes are changing rapidly between on-premise, private, hosted, and public clouds, at a much higher pace than we have ever witnessed before, resulting in ever-changing customer needs. These needs have to be closely monitored in these turbulent evolutionary cloud times!

All aboard

Big public cloud providers sponsor this evolution in technology in a big way, investing an average of 30 billion dollars each year in a growing public cloud industry worth around 140 billion today, with an astounding 500 billion predicted by 2020 [1]. In 2016, 11% of IT budget otherwise spent on-premise is now spent on cloud computing as a new delivery model [2]. By 2017, 35% of new applications will be using a cloud-enabled continuous delivery model, streamlining the rollout of new features and business innovations [2]. In 2015, Amazon made 7 billion dollars in cloud revenue against Microsoft Azure’s 5 billion [3]. An analyst at ‘FBR Capital Markets’ predicts that Microsoft will break 8 billion in cloud revenue in 2016, catching up with Amazon [3].

Sources: [1] Bessemer Venture Partners, State of the Cloud report; [2] IDC FutureScape predictions; [3] InfoWorld, “2016: The year we see the real cloud leaders emerge”.

Adopting these innovations in a timely manner and, more importantly, adopting the philosophy, mindset, and a new default way of doing cloud business, can be crucial for providers and businesses for surviving in this new cloud era. If you are not actively working on what your business can do with these developments, then your competitor is. With every big change, you have winners and losers. Winners are going to learn, adapt, and thrive. Losers are going to resist; they will continue to feel comfortable in their own (IaaS) cloud bubble until it is too late for them to change.

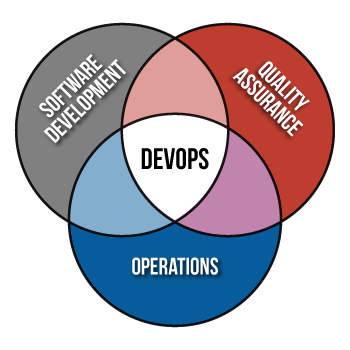

DevOps movement

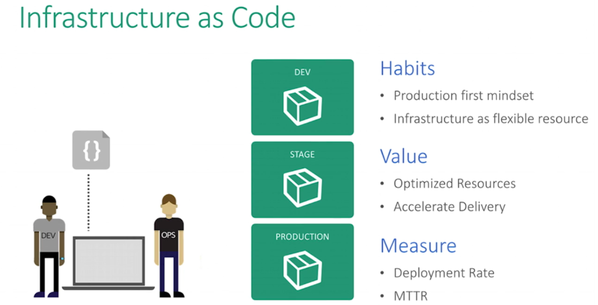

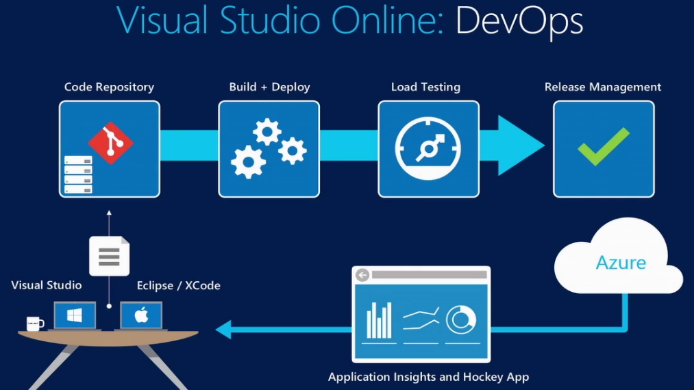

As mentioned, developer software is updated with all kinds of new application development functionality and cloud deployment features. These features are making the delivery and deployment relatively easy, without requiring any regular IT staff or manual infrastructure involvement. IaaS disappears into the background and becomes an invisible part of the cloud service. It is now deployed as ‘Infrastructure as Code’ (IaC), and is part of the overall application template.

These new development features also help developers scale the application, based on company resource, availability, and redundancy needs. Deployment management is orchestrated from a single cockpit view in their cloud-compatible developer software of choice.

From there, they implement new DevOps practices, utilizing the new cloud deployment approach, which requires one single cloud subscription, giving them direct access to all cloud service offerings; a playground with endless application hosting possibilities and combinations. They are able to deploy a web app in an ‘app service‘, a microservice compatible application in a service fabric, a containerized application in a container service, or a more traditional (monolithic) application in a full ‘Infrastructure as Code’ (IaC) environment. The application and all dependencies are deployed from their development cockpit, with a single template containing various resources and services; like, compute, network, storage, load balancers, security, VPN, routes, endpoints, or authentication.
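To make the single-template idea more concrete, here is a minimal sketch in Python. The template layout, the resource names, and the `deployment_order` helper are all hypothetical illustrations and not the schema of any real cloud provider. The sketch only shows the principle: a template declares resources and their dependencies, and tooling can derive a valid deployment order from that declaration.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical template: each resource lists the resources it depends on.
# Names and structure are illustrative only, not a real provider schema.
TEMPLATE = {
    "network": {"type": "virtual-network", "depends_on": []},
    "storage": {"type": "storage-account", "depends_on": []},
    "load_balancer": {"type": "load-balancer", "depends_on": ["network"]},
    "web_vm": {"type": "compute", "depends_on": ["network", "storage"]},
    "web_app": {"type": "application", "depends_on": ["web_vm", "load_balancer"]},
}


def deployment_order(template):
    """Return resource names in an order that respects every dependency."""
    graph = {name: set(spec["depends_on"]) for name, spec in template.items()}
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    for name in deployment_order(TEMPLATE):
        print(f"deploy {name} ({TEMPLATE[name]['type']})")
```

Because the template is plain data, it can be version-controlled and redeployed identically, which is the main point of treating infrastructure as code.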

Agile

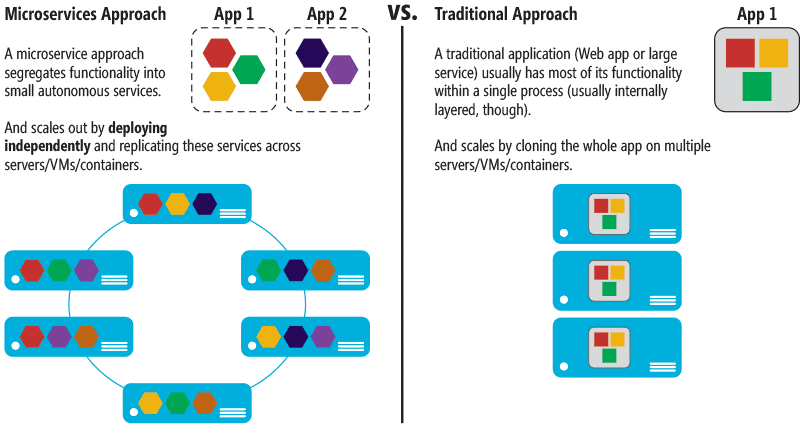

Truly agile development processes aim for shorter, flexible development cycles that continuously add functionality along the way, instead of longer, dreadful development cycles that depend on almost everything. This allows development teams to work independently of each other on different application features (microservices). One team develops the ‘mobile device experience’, while another team does the ‘order processing’ part, without interfering with each other or with the application as a whole. The magic combination of new automated deployment models, DevOps culture, and agility enables companies to build out an entire infrastructure in the time it would have taken them merely to design that infrastructure for a monolithic client-server application.

Jobs

The transition to a cost-effective, agile, elastic, and scalable deployable application requires IT staff to learn and incorporate a whole new skill set, bringing operations and development even closer together in the ever-growing DevOps movement. Deploying infrastructure in the cloud is now more about code than about directly touching the underlying supporting systems, requiring staff to work bottom-up instead of in the traditional top-down way. In the top-down approach, the developer waits for the architect to finish designing and for the IT Pro to deploy the infrastructure, and only then tries to deploy the application in that inflexible and bulky framework.

Infrastructure design, configuration, and manual deployments are going to be less significant with each passing year. Knowledge of cloud design, consultancy, and automated application deployment are taking their place. The gap between developer and IT Pro is growing smaller, requiring the developer to learn more about the deployment side of things and the IT Pro to learn more about cloud automation supporting the application deployment process.

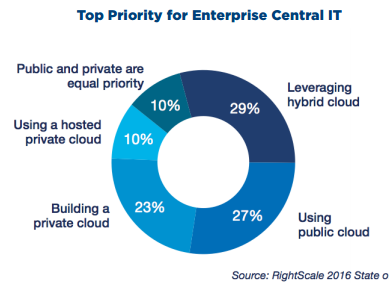

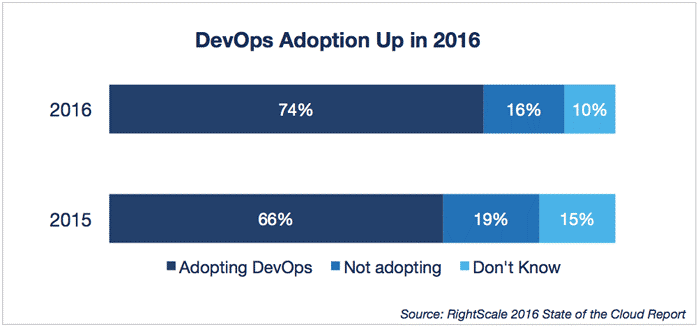

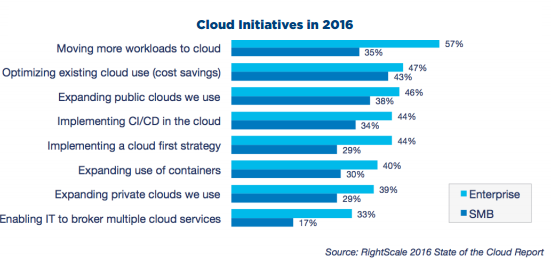

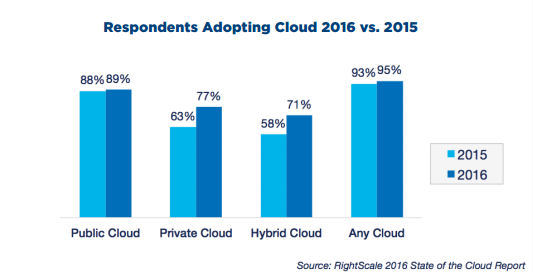

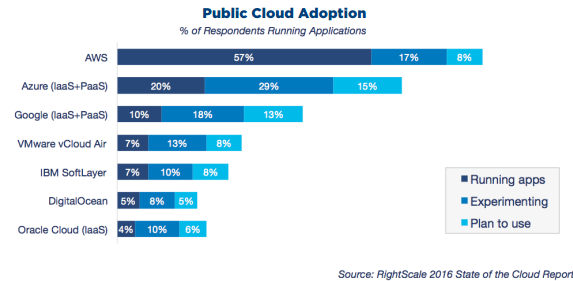

Cloud adoption

Cloud adoption still grows rapidly each year. It directly relates to cloud safety, cost, and maturity: companies need to feel confident and comfortable enough to host their valuable services and data in the cloud. A research study conducted at the end of 2015 by North Bridge among 1000 survey respondents showed that security (45.2%), regulatory/compliance/policy (36%), privacy (28.7%), vendor lock-in (25.8%), and complexity (23.1%) are the factors holding cloud adoption back.

Due to cloud maturity, public cloud adoption inhibitors are becoming less significant each year. For instance, outages and security issues do not have a huge impact anymore. Outages fall well within the SLA of the specific service, and security issues on the platform are almost non-existent.  Outage and security are strongly tied to reputation, which is a big motivator for public cloud providers to invest heavily in these sensitive areas.

Privacy, regulatory, compliance, and policy issues are areas of concern for enterprise companies. Public cloud providers are catching up by conforming to almost all global and industry standards regarding security, privacy, and compliance. Most enterprises have already compared their internal policies with public cloud provider policies and sorted these legal issues out. They already know where they stand in these matters and how to deal with them. Therefore, these policies, which once held cloud adoption back, are fading away and becoming less relevant. The remaining adoption inhibitors are complexity and vendor lock-in, which are more persistent and difficult to tackle. They often relate to existing (more complex) infrastructure, and require new technology, expertise, investments, and time to resolve; this is an issue that a cloud consultancy company, or a trusted local hosted cloud provider with advisory services, could assist with.

Smaller companies and start-ups care less about these adoption issues. They begin with websites, mail, and office applications in the cloud, gradually expanding their cloud efforts to other more comprehensive services when they grow bigger. They are discovering and gradually adopting the new default way of doing cost-effective (IT) cloud business. They are new-age, lean and mean businesses with flexible pay-per-use subscriptions, competing against the established rigid order.

Size matters

The bigger a company gets, the more infrastructure resources it needs. More resources result in more complex environments, making it more difficult to migrate to the cloud and delaying its adoption. Major enterprises hosting complex environments are very bulky and, therefore, reluctant to host their valuable data in a changing cloud landscape. They also have to account for the ‘return on investment’ (ROI) on existing infrastructure before investing in new technology. However, they do use the public cloud for new greenfield deployments. They are getting familiar with the cloud-first model, which gives them better insight into how existing complex on-premise infrastructure can integrate with it. In the long term, they will transition this existing viable infrastructure to the cloud when it is more mature and affordable for them.

Big, leading-edge technology enterprises, whose IT drives their core business, are a more active force behind most heavy cloud investments and innovations. They need the scalable and elastic cloud to instantly deploy their resource-intensive infrastructure on, which requires that their distributed application models are managed, scaled, and orchestrated effectively. Examples of these leading-edge cloud enterprises are Netflix, Amazon, Google, IBM, LinkedIn, Nike, PayPal, Spotify, and Twitter. Netflix is the first real modern-day microservices adopter and source of inspiration, sharing its code and lessons learned with the public. Since then, more enterprise companies have followed its microservices strategy, turning their existing tiered infrastructure into a microservice-oriented one.

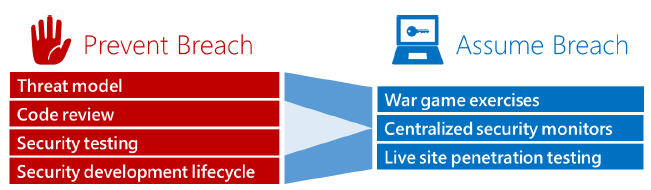

Security

When you talk about cloud adoption, you also have to deal with the security aspect, which plays a bigger role every year and is something every cloud provider, small or large, throws money at nowadays. In the end, a breach damages the cloud provider’s reputation, and also damages cloud adoption in general. Most breaches are not due to the market being flooded with new PaaS or SaaS hosted applications. On the contrary, many breaches occur because companies are running outdated legacy monolithic applications in a mix with other monolithic applications, likely on badly maintained and rarely updated server and network infrastructure components that have been overtaken by newer technology. The same technological innovations allow hackers to automate attacks and exploit newly discovered vulnerabilities. These attacks, with possible breaches and accompanying security threats, urge companies to update their environment. The recognition that the cloud may be more secure than their own datacenter then drives cloud adoption even further.

Companies renew their security, thereby reassessing the application or solution to meet the latest security standards, very likely turning it into a new PaaS or SaaS based cloud application at a trusted (public) cloud provider. The cloud provider now manages the underlying infrastructure used by thousands of customers. Public cloud innovation, agility, standardization, and multitenancy happen at unthinkable hyper-scales and go along with constant security hardening and penetration testing by company red (attack) and blue (defend) teams. The solid battle-hardened cloud services are less likely to be a risk factor.

Therefore, a breach at public cloud giants like Azure, Amazon, and Google is less likely to occur, compared with environments hosted on-premise or in smaller clouds. Company focus can then be shifted to the security of the application, because many hacking attempts and breaches occur at the application level, caused by unpatched or unknown vulnerabilities in the code.

Security thus becomes a key enabler for cloud adoption.

Formula

So the cloud adoption formula can be derived from the business size, infrastructure complexity (lock-in), and the prospective ROI, plus company data policies (regulation/privacy), weighed against the potential data/security risk of hosting the application in the public cloud, along with what competitors are doing in their industry. Put all that in a business case for a product, and discover what is achievable towards greater cloud adoption.

Game changers

Technological innovations are driving the shift towards PaaS in a Hybrid cloud. Let’s highlight the most important ones and fit them together into the PaaS puzzle of today. Content in this section is seen from an Azure perspective; after all, this blog is about the need for Azure Stack. However, it does relate to recent cloud developments in general and overlaps with public cloud offerings from competitors like AWS and Google.

Microservices

Microservices have existed for a very long time but have never gained the kind of momentum we are experiencing today. They are loosely coupled services running independently of each other, as illustrated below. However, they function as a whole, each providing functionality to the application they belong to. Each microservice plays its role in the bigger picture. Development teams can work independently on different functionality (microservices) for the same application, without updating the application itself. They have their own development cycles, allowing them to work quickly and efficiently.

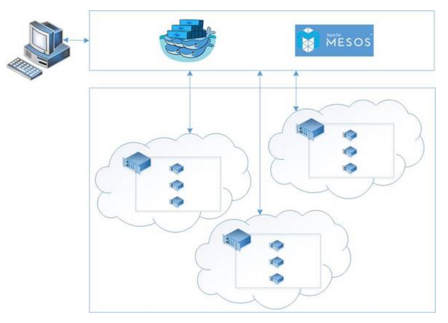

Microservices run in clusters. You give a microservices orchestrator a cluster of resources and then deploy the microservice-compatible application to it; the orchestrator figures out where to place the individual services. It also takes care of the health of those services and of scaling them.
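The placement step can be sketched in a few lines of Python. This is a deliberately simplified, hypothetical illustration: the `place_services` function, the node names, and the capacity units are assumptions made for this example, and real orchestrators use far more sophisticated strategies. It greedily puts each service instance on the node with the most free capacity.

```python
def place_services(nodes, services):
    """Greedily assign each service instance to the node with the most free capacity.

    nodes:    dict of node name -> capacity units (hypothetical unit, e.g. CPU cores)
    services: dict of service name -> (units per instance, instance count)
    Returns a dict of node name -> list of service names placed there.
    Raises RuntimeError if an instance does not fit on any node.
    """
    free = dict(nodes)
    placement = {name: [] for name in nodes}
    # Place the largest instances first so they are not squeezed out later.
    for service, (units, count) in sorted(
        services.items(), key=lambda item: item[1][0], reverse=True
    ):
        for _ in range(count):
            target = max(free, key=free.get)  # node with the most free capacity
            if free[target] < units:
                raise RuntimeError(f"no node has room for {service}")
            free[target] -= units
            placement[target].append(service)
    return placement


if __name__ == "__main__":
    cluster = {"node-a": 8, "node-b": 8, "node-c": 4}
    app = {"orders": (2, 3), "mobile-api": (1, 4), "reports": (4, 1)}
    for node, placed in place_services(cluster, app).items():
        print(node, placed)
```

A real orchestrator would rerun this kind of decision continuously, moving instances when a node becomes unhealthy and adding or removing instances as load changes.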

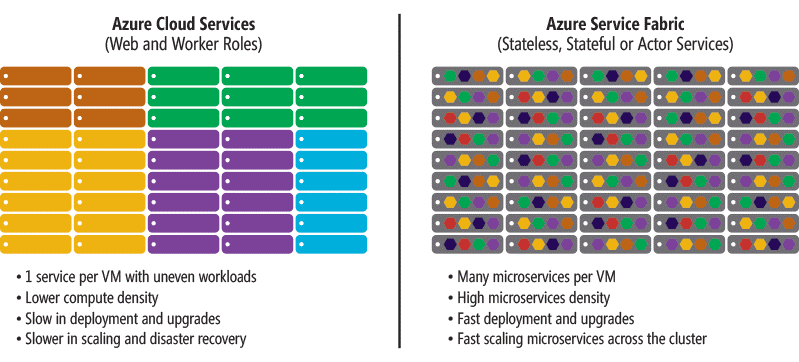

You can manage clusters and resource complexity yourself with an orchestrator, and be more flexible and cloud vendor independent. Alternatively, you can use an out-of-the-box PaaS solution, powered by a ‘service fabric’ and by resource managers doing all of the complex orchestration, management, and provisioning for you, like Azure did with their ‘service fabric‘ service.

Hyper-scale microservice clusters hosted on Service Fabric are the foundation for most Azure services. The quote below from Azure’s Service Fabric documentation illustrates very well how they are doing this today.

“Just as an order-of-magnitude increase in density is made possible by moving from VMs to containers, a similar order of magnitude in density becomes possible by moving from containers to microservices. For example, a single Azure SQL Database cluster, which is built on Service Fabric, comprises hundreds of machines running tens of thousands of containers hosting a total of hundreds of thousands of databases. (Each database is a Service Fabric stateful microservice.)”

The illustration below compares Azure’s older service model with their new one using microservices in a service fabric. The service fabric handles all orchestration and automation with the help of resource managers managing the stateful and stateless microservices clusters along with all supported resources.

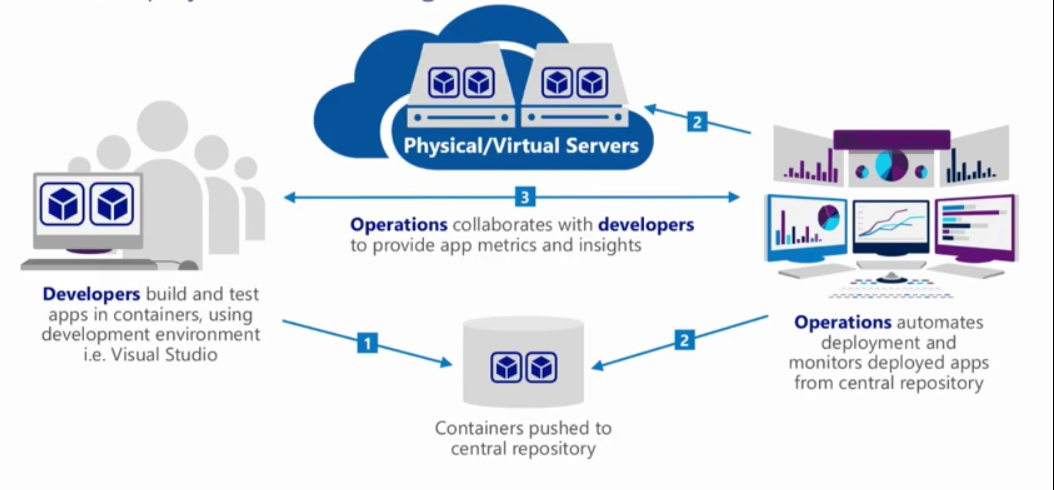

Containers

Containers are the foundation for the microservices revolution. Container technology is fundamentally operating system virtualization: the ability to isolate a piece of code so that it cannot interfere with other code on the same system. You can scale up and down instantly, and you can develop and test very quickly. So, once again, developers using new technology in developer software are driving this within companies, forcing them to change their IT strategy from a monolithic deployed application infrastructure to a microservices-oriented one using containers. New microservices offerings supported by containers will revolutionize the IT and cloud application landscape.

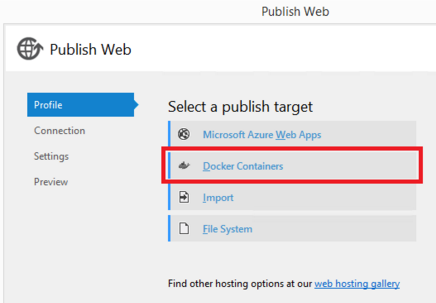

Until recently, only web/mobile or custom cloud provider applications could be hosted in Azure’s PaaS services. However, a lot has changed with the introduction of container virtualization, enabling a new wave of microservices at unimaginable scales, and making it easier to support a whole range of new cloud applications from different environments. Container standardization is largely made possible by Docker. Docker offers consistent deployment technology with a unified packaging format, making it very easy to deploy containers to different types of environments.

Container Resource Clusters

Containers allow PaaS service providers to create a cluster of containers. Each container cluster offers a resource needed for a PaaS compatible application to function, like web, database, storage, or authentication functionality. Combining these clustered application resources with clever automation provided by the PaaS service allows non-microservice orientated applications to be hosted as a PaaS application, an application otherwise dependent on a traditional infrastructure rollout.

Container Service

Microservice compatible applications, which are, most of the time, container compatible applications, do not need that kind of service management and are handled differently compared to a regular PaaS application. They require orchestration engines and tooling to be managed effectively.

There is much development in this space, with a lot of automation done by public cloud providers, making the experience of managing and deploying large distributed clusters of microservice containers more developer and IT admin friendly, but also more interoperable, making it easier for customers to host the same container application elsewhere.

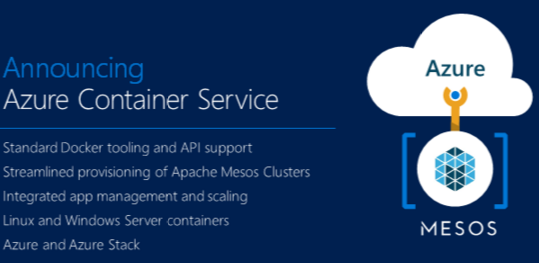

A fine container service example nowadays is the Azure Container Service, based on Apache Mesos and technology from Docker. Yes, it’s a Unix solution.

However, you might know they already made Windows and Hyper-V Containers available on Windows Server 2016.

At the moment of writing this (March 2016), that makes it a matter of months until they announce the availability of Windows and Hyper-V containers in Azure Container Service, making it available to the huge Microsoft developer base and truly unleashing a revolution in the container space. This is further substantiated in a blog post by Azure program manager ‘Ross Gardler’, with the following statement:

“Microsoft has committed to providing Windows Server Containers using Docker and Apache Mesos is being ported to Windows. This work will allow us to add Windows Server Container support to Azure Container Service in the future”

Drumroll! If you looked closely at the ‘Azure Container Service’ slide above (presented at AzureCon by Scott Guthrie), you will have seen two significant words: AZURE STACK! So it seems that they also intend to bring the Azure Container Service as an additional PaaS service to your data center, without mentioning it in any Azure Stack blog post or roadmap!

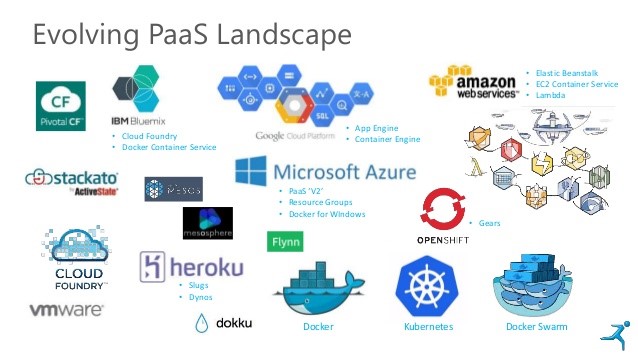

PaaS

Platform as a Service (PaaS) has also existed for quite a long time and is provider dependent, each provider offering their solution based on their own software. PaaS offers you an application deployment experience without the need to worry about the underlying infrastructure. Cloud providers arrange that for you. PaaS services are now in the process of being reinvented with microservices and containers. New PaaS services and features will be made available over time with microservices, containers, and highly automated processes as their foundation, making it easier for companies to host existing and new applications in the cloud without bothering about infrastructure. Infrastructure otherwise deployed for an application, such as IaaS, is now deployed as an invisible part of the PaaS service. IaaS spending is shifting towards PaaS as the new way of delivering an application in the cloud.

A PaaS service allows you to host your web/mobile, custom app, container, or even microservice compatible application, instantly providing compute, network, security, and redundancy at hyper-scales. On top of that, PaaS services can also provide stateful resources to your application, like databases, authentication, application logic, device access/compatibility, and storage, thus taking care of resource orchestration, scaling, redundancy, and monitoring.

ISVs currently operating in their own private cloud and providing SaaS to their customers can also leverage PaaS functionality by re-envisioning their SaaS service and placing it on top of PaaS, thereby gaining the same advantages concerning scalability and automation of application and infrastructure resources. Everything is taken care of by the PaaS service, which manages and orchestrates resources on the ‘invisible’ infrastructure in the background.

Microsoft Azure

Azure services offering application-based PaaS are the new ‘Container Service’, the new ‘Service Fabric’, and the revamped ‘App Service’. The ‘Container Service’ lets customers run container clusters with a high level of manageability and interoperability. It is more complex and requires more intervention, but easily allows customers to run the same containerized application elsewhere. The ‘Service Fabric’ is a microservice-based solution, which allows stateless and stateful applications to run as a custom microservice orientated application. Shared resources, monitoring, usage, and scaling are handled by the service. Supported applications are custom, microservice compatible applications tailored to ‘Service Fabric’. They can be created, deployed, and locally tested in a cluster with ‘Visual Studio’. The ‘App Service’ allows customers to deploy native PaaS applications like a web (websites), mobile, API, logic, or custom app. New functionality and support for new applications are added on a monthly basis.

Landscape

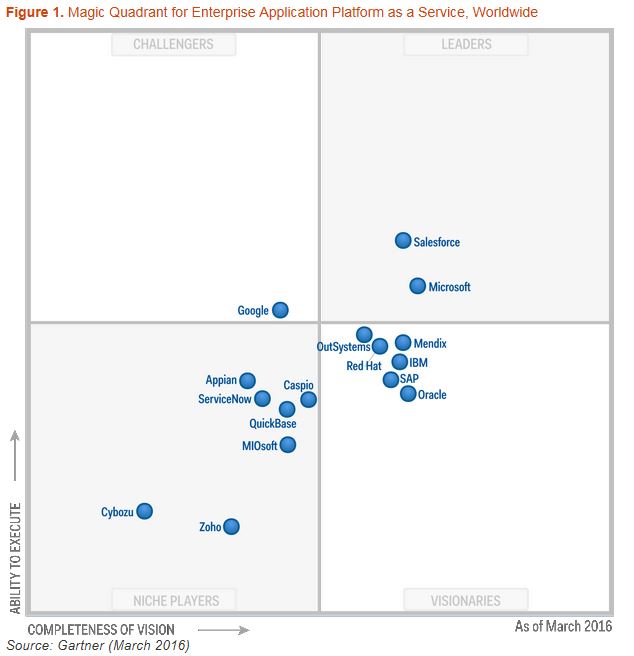

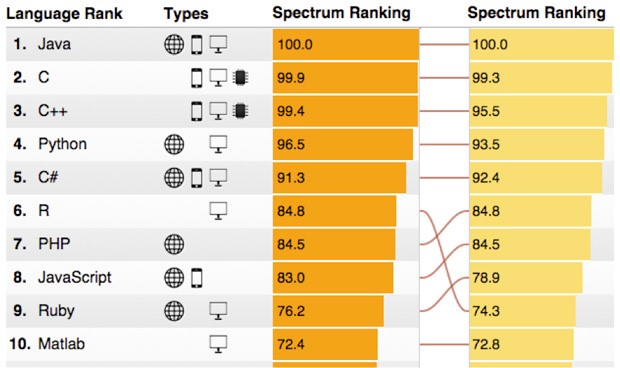

If you look at the left Gartner Magic Quadrant 2015 picture above, you’ll see a public cloud PaaS landscape dominated by Salesforce with Microsoft on its tail. Things changed as of March 2016, as seen in the right Magic Quadrant picture. Microsoft took over and is now the new application PaaS leader! It clearly illustrates the rapid development, power, and adoption of Azure’s PaaS services. This adoption is backed by a large, loyal (Windows) developer base, using ‘Visual Studio’ with C, C++, and C#, as seen on the right. This picture illustrates the top 10 programming languages in 2015.

Azure adoption

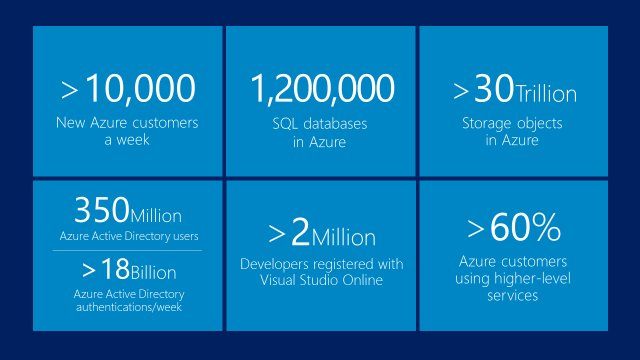

The slide on the right shows Azure cloud consumption by customers. With 2 million developers using Visual Studio with Visual Studio Online (Team Services) collaborating with their team on application development in Azure, developer adoption and PaaS market share foundations are rock solid.  New containers and microservices offerings in public, as well as in hosted clouds, can count on large scale adoption. It only requires developers to update their software and update application compatibility with the new PaaS service, and, last but not least, to deploy the application through their development software to the hosted or public cloud. No infrastructure to worry about and no costly manual tasks required.

Pay-per-use

So you can imagine how easy and efficient deploying a PaaS application is, especially if you do not have to worry about the underlying components. It is not only easily deployed and managed, but it also invites you to try it out without making any investment. Most cloud offers are solely based on a resource consumption model, which is ideal for a first test-drive in a public cloud of one of the providers above. Capital costs transform into flexible variable costs. When that test-drive is over, it is fairly easy to deploy that same application with all its resources in a production environment; the blueprint is already there. Just modify a couple of variables and scale accordingly!
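
The ‘blueprint plus a couple of variables’ idea can be sketched in a few lines of Python. This is only an illustration, not any provider’s actual template format; the `BLUEPRINT` fields, the `render` helper, and the size and count values are hypothetical names chosen for the example.

```python
from copy import deepcopy

# Hypothetical blueprint: the application definition is shared by every
# environment; only the values behind the "{...}" placeholders differ.
BLUEPRINT = {
    "app": "webshop",
    "services": ["web", "database", "storage"],
    "instance_size": "{size}",
    "instance_count": "{count}",
}


def render(blueprint, variables):
    """Return a copy of the blueprint with '{name}' placeholders filled in."""
    rendered = deepcopy(blueprint)
    for key, value in rendered.items():
        if isinstance(value, str) and value.startswith("{") and value.endswith("}"):
            rendered[key] = variables[value[1:-1]]
    return rendered


# Same blueprint, two sets of variables: a cheap test-drive and production.
test_drive = render(BLUEPRINT, {"size": "small", "count": 1})
production = render(BLUEPRINT, {"size": "large", "count": 8})
```

Both environments share the same list of services; only the two variables change, which is what keeps the step from test-drive to production small.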

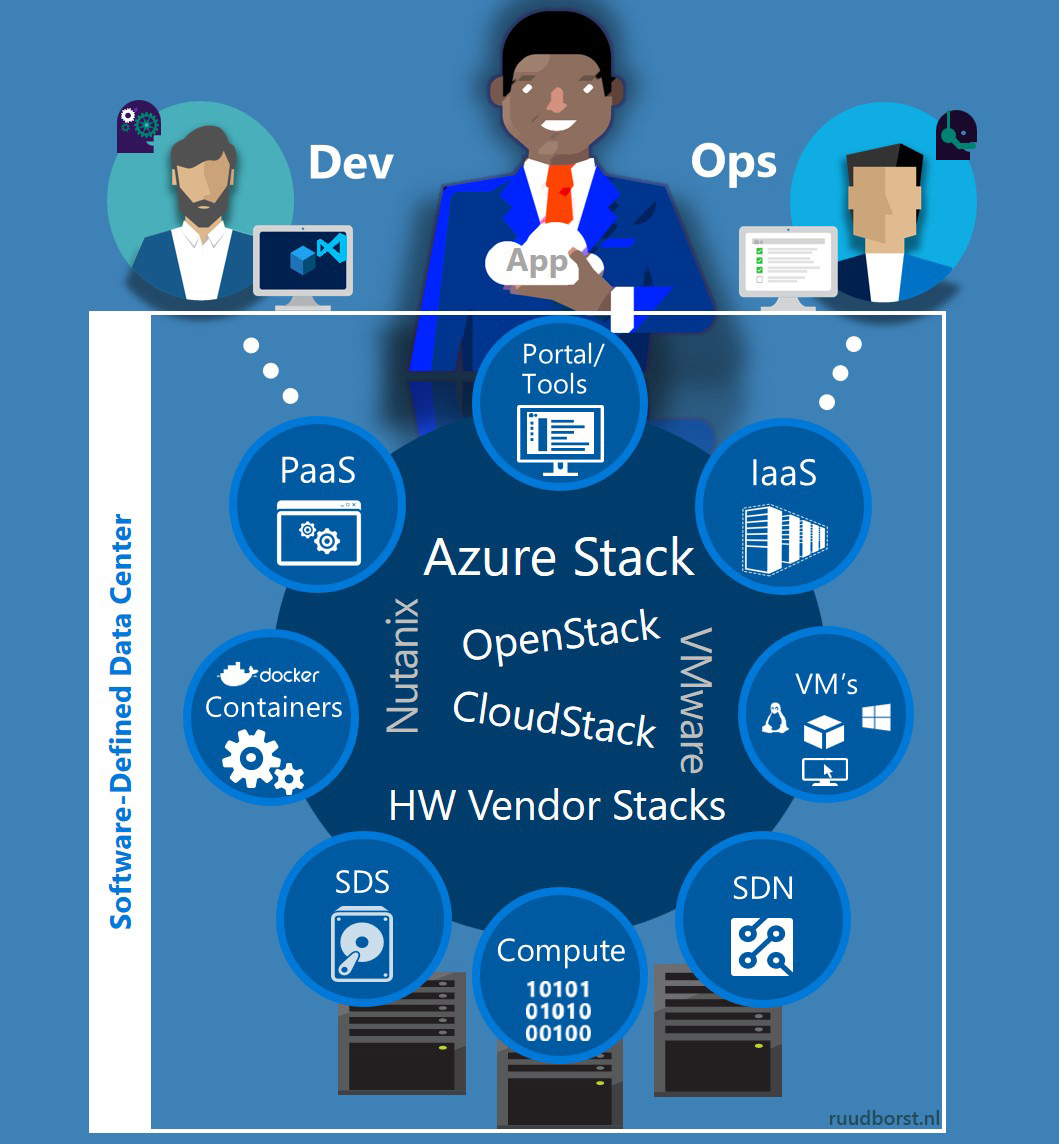

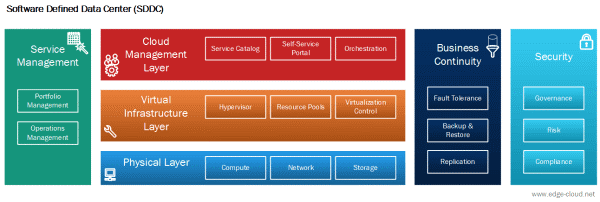

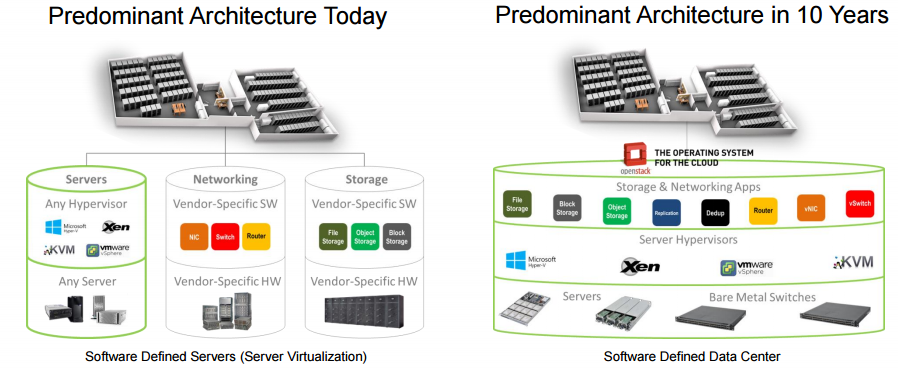

Software defined datacenter (SDDC)

We have not yet talked about software-defined networking, storage, and compute innovations, which are the driving force behind rapid cloud developments in private and hosted clouds. That’s because we were approaching all recent events from a public cloud service provider perspective, where we did not have to deploy any physical hardware. Deployment, provisioning, configuration, and operation of the entire physical infrastructure are abstracted from hardware and implemented through software as a service. Everything is taken care of by the public cloud provider’s own fabric layer, offering a full software stack with virtual services on top of physical hardware. Provisioning of infrastructure starts as a provisioning request via the APIs or through a self-service portal, where virtual resources such as storage, network, and computing infrastructure will be delivered as the foundation of the new virtual service.

However, when hosted on-premise, in a private cloud or in the hosted cloud with a cloud service provider, you have to rely on your own or on the provider’s provisioning system to deploy or change network, storage, or compute. This often results in significant delays and a non-standardized way of delivering resources. Lacking an interoperable way of provisioning or exporting configuration and resources elsewhere, to another cloud or, even better, to a hybrid cloud, this complexity can result in a cloud vendor lock-in, making the transition to a multi or hybrid cloud a painful and costly process.

The dependency on cloud service providers using custom automated systems, or even manual operations, is going to change in the years ahead. Knowledge gained by delivering services and automation in a virtual software-defined way, from hyper-scale public, open-source, or hardware vendor clouds, is brought to customers. It is brought as a complete SDDC solution, with service provider functionality to your data center, where all IT infrastructure like compute, storage and networking is virtualized and instantly delivered as a service. Deployment, provisioning, monitoring, and management of the data center resources are carried out by the SDDC’s own automated software processes, offering consistent and interoperable deployment models, further closing the big innovation gap between public, hosted, and private clouds.

We all know compute virtualization carried out by hypervisors. Storage and network virtualization go along with compute but are less well known. However, in the last two years they have taken a huge development leap by providing enterprise-grade features, features normally offered by expensive hardware. Storage and network are abstracted from hardware and defined as code, creating virtual resources on top of standard of-the

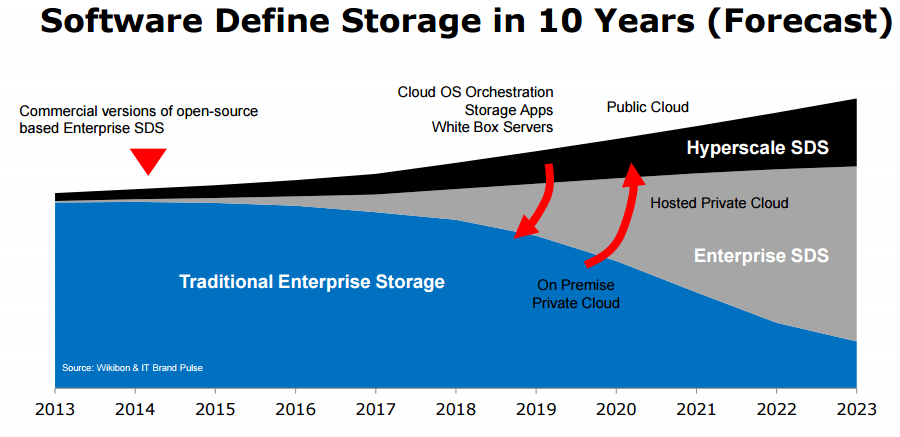

Software Defined Storage

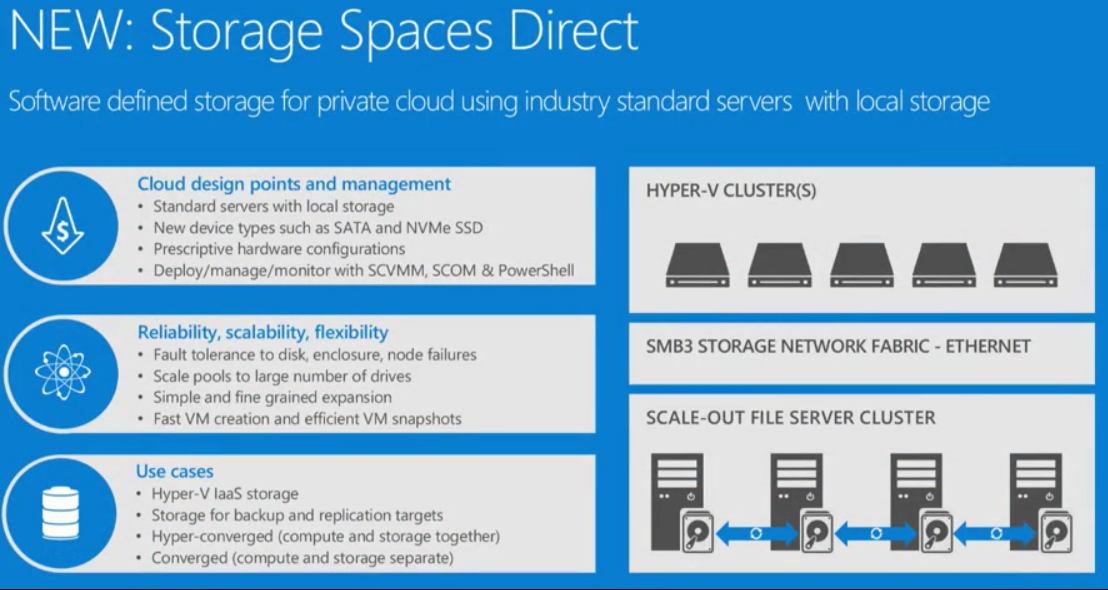

Software-defined storage (SDS) is, in essence, virtual storage. You can compare it with a RAID array, where you define a logical volume with a RAID type. With SDS, data is not striped across local disks behind a RAID controller but across servers instead: standard off-the-shelf servers with SDS installed as software taking over the role of the RAID controller. SDS handles all storage replication and management information between a cluster of servers. Server-local or even shared disks are used in stretched pools with disks from other member servers. Virtual disks are created and replicated to all nodes from the combined storage in these pools.
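
To make the ‘RAID across servers’ comparison a bit more concrete, here is a minimal Python sketch of how pieces of a virtual disk could be spread over a cluster so that each piece lives on more than one server. It is not how any particular SDS product places data; the `place_replicas` and `readable_after_failure` functions, the round-robin placement, the ‘slab’ terminology, and the server names are all assumptions made for the illustration.

```python
def place_replicas(slab_count, nodes, copies=2):
    """Assign each slab of a virtual disk to `copies` distinct nodes, round-robin."""
    if copies > len(nodes):
        raise ValueError("need at least as many nodes as copies")
    placement = {}
    for slab in range(slab_count):
        # Consecutive node indices (modulo the cluster size) are always distinct
        # as long as copies <= len(nodes).
        placement[slab] = [nodes[(slab + i) % len(nodes)] for i in range(copies)]
    return placement


def readable_after_failure(placement, failed_node):
    """The virtual disk stays readable if every slab still has a surviving copy."""
    return all(
        any(node != failed_node for node in owners)
        for owners in placement.values()
    )


nodes = ["server-1", "server-2", "server-3"]
mirrored = place_replicas(slab_count=6, nodes=nodes, copies=2)
unprotected = place_replicas(slab_count=6, nodes=nodes, copies=1)
```

With two copies per slab, losing any single server leaves every slab readable; with a single copy, losing one server makes part of the virtual disk unavailable, which is the redundancy a RAID controller provides within one box and SDS provides across boxes.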

Storage functionality is abstracted from the physical storage device (a SAN, for instance) and placed as software on commodity servers. You have the choice to use any disk compatible with your server and benefit from tiered storage. Use cheap and slow SATA disks for cold data and fast NVMe or SSD disks for hot data. SDS also offers enterprise features normally available with traditional SAN hardware. With software-defined storage, you get better redundancy, easy deployments, and frequent updates. Patching can now be easily done without downtime or significant performance loss, all due to the fact that these nodes replicate to each other and form a highly redundant cluster, compared to a (single) dedicated shared storage unit.
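
The hot/cold tiering idea can be illustrated with a small Python sketch. It simply ranks blocks by how often they are accessed and puts the busiest ones on the fast tier; the `assign_tiers` function, the tier labels, and the access counts are hypothetical, and real SDS products use their own, more sophisticated heuristics.

```python
def assign_tiers(access_counts, hot_capacity):
    """Put the most frequently accessed blocks on the fast tier, the rest on the slow tier."""
    ranked = sorted(access_counts, key=access_counts.get, reverse=True)
    hot = set(ranked[:hot_capacity])
    return {block: ("ssd" if block in hot else "sata") for block in access_counts}


# Hypothetical access statistics: block name -> number of recent reads/writes.
access_counts = {"blk-a": 120, "blk-b": 3, "blk-c": 45, "blk-d": 0}
tiers = assign_tiers(access_counts, hot_capacity=2)
```

Here the two busiest blocks end up on the fast tier and the rarely touched ones stay on cheap disks, so the expensive capacity is only spent where it pays off.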

Microsoft

Microsoft Azure’s battle-hardened software-defined virtual network and storage are brought to the datacenter via their server software. Microsoft will release ‘storage spaces direct’ (S2D) in the third quarter of 2016 with its new server family. Microsoft’s ‘software-defined storage’ (SDS) solution allows you to use local or attached non-shared storage and offer it in a cluster as redundant virtual disks. VMware’s Virtual SAN and EMC’s ScaleIO are similar, already released, and well-known SDS solutions. They are leaving the traditional, expensive, and complex SAN far behind as a legacy redundant storage solution from the past. As opposed to its competitors, Microsoft’s ‘storage spaces direct’ (S2D) ships as part of ‘Server 2016’, making it available to a ridiculously broad audience, with functionality not only reserved for large enterprises but also for smaller ones. Small and medium-sized enterprises (SME), in particular, can benefit the most by using their own local storage to create a highly available storage solution, without investing tens of thousands of dollars in a traditional SAN. Coming from the battle-hardened Azure cloud, backed by a large user-base (audience), and delivered with proven server software, Microsoft’s new ‘storage spaces direct’ (S2D) solution will reach its maturity, adoption, and trust soon after its release. As a result, it will become a strong competitor against traditional storage solutions and already established SDS solutions.

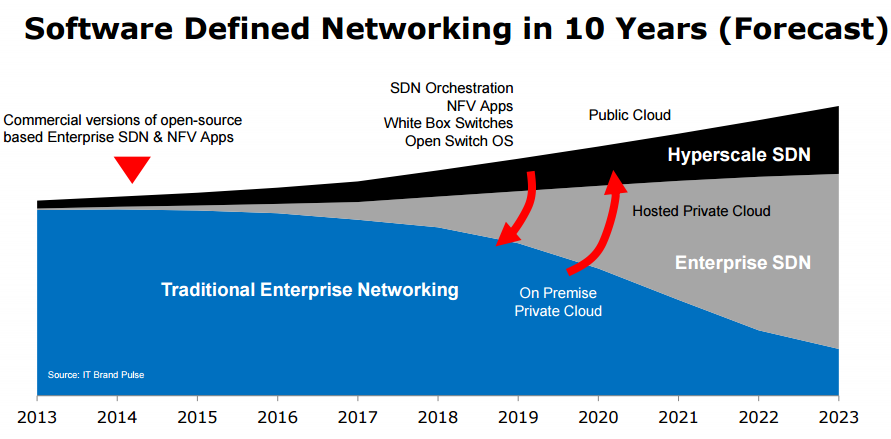

Software Defined Networking

Virtual networking came along with the hypervisors and compute support, offering virtual networks for routing traffic between VMs, and outside the host with NVGRE. It has taken another leap in the last few years by also virtualizing enterprise network functionality, like firewalling, load balancing, VPN gateways, and routing with VXLAN and BGP support, moving logic and code away from hardware-based devices to pieces of virtualized network software, deployed on virtual or physical servers controlling the network. Network consumption now moves from a very complex and traditional model to this new software model. Apart from complexity and hardware savings, there are more serious advantages to using this new software-defined networking (SDN) model.

Automation

One is automation; it reduces the time needed to deploy network and security almost to zero. These are complex network operations otherwise handled by networking operations staff configuring every device involved in the network stack. They have to plan, document, and deploy the operations and deliver the result back to the customer, which can take a considerable amount of time and, needless to say, is very costly. The same process in an SDDC scenario using network virtualization can now be done directly by the customer, manually or through a pre-configured template.

HA and DR

High availability (HA) and disaster recovery (DR) can be (based on your setup) another advantage, where you deploy the same network topology and configuration in multiple clouds. Redeploying or exporting configuration for documentation purposes is standard functionality, all done by a single click or script, and it allows you to build out a second copy in another cloud providing HA or DR functionality. This kind of network automation and deployment clearly provides a whole range of new business benefits and opportunities otherwise constrained by physical hardware rollout.
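
Because a software-defined network is just configuration data, exporting it and replaying it in a second cloud is conceptually simple. The Python sketch below shows the idea under stated assumptions: the `topology` structure, the `export_topology` and `deploy` functions, and the cloud names are all hypothetical, and `deploy` is a stand-in that only returns what would be created rather than calling any real SDN API.

```python
import json

# Hypothetical network definition: subnets, a firewall rule, and a load balancer.
topology = {
    "subnets": [
        {"name": "frontend", "cidr": "10.0.1.0/24"},
        {"name": "backend", "cidr": "10.0.2.0/24"},
    ],
    "firewall_rules": [
        {"from": "frontend", "to": "backend", "port": 1433, "action": "allow"},
    ],
    "load_balancers": [
        {"name": "web-lb", "subnet": "frontend", "port": 443},
    ],
}


def export_topology(topo):
    """Serialize the topology, e.g. for documentation or redeployment."""
    return json.dumps(topo, indent=2, sort_keys=True)


def deploy(exported, cloud_name):
    """Stand-in for a real deployment call; returns what would be created."""
    return {"cloud": cloud_name, "topology": json.loads(exported)}


exported = export_topology(topology)
primary = deploy(exported, "primary-cloud")
secondary = deploy(exported, "dr-cloud")
```

The same exported document drives both deployments, so the second site is an identical copy by construction rather than by careful manual reconfiguration.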

Security

Last, and certainly not least, is security. Because network virtualization runs on any virtual machine, you now have the ability to apply ‘security services’ to every packet within your SDDC solution, as opposed to the traditional model, where security sits on the perimeter network and only sees traffic leaving the datacenter, which is only a very small part of all packets being sent and received in your topology. It also gives you full data center network monitoring insight into your solution or application in one single view. Apply machine learning and analytics to these statistics and get even more insight into how your application behaves. Adjusting the application based on these insights, directly in the SDDC or in the product itself, generates even more business value and benefits.
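
The difference between perimeter-only inspection and per-VM inspection can be shown with a toy Python model. It is an illustration only: the `Packet` type, the VM names, and the two `seen_by_*` functions are hypothetical, and the traffic list is made up to show the principle, not measured.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str


# Hypothetical VMs living inside the data center.
INTERNAL = {"web-vm", "app-vm", "db-vm"}


def seen_by_perimeter(packet):
    """A perimeter device only sees traffic that crosses the data center edge."""
    return (packet.src in INTERNAL) != (packet.dst in INTERNAL)


def seen_by_distributed(packet):
    """A per-VM (distributed) policy sees any packet that touches an internal VM."""
    return packet.src in INTERNAL or packet.dst in INTERNAL


traffic = [
    Packet("web-vm", "app-vm"),
    Packet("app-vm", "db-vm"),
    Packet("internet", "web-vm"),
    Packet("db-vm", "app-vm"),
]

perimeter_count = sum(seen_by_perimeter(p) for p in traffic)
distributed_count = sum(seen_by_distributed(p) for p in traffic)
```

In this made-up sample the perimeter sees one of the four packets, while the distributed policy sees all four, because most of the traffic never leaves the data center.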

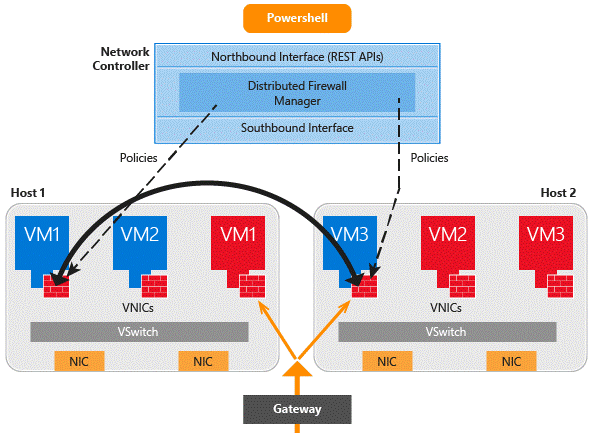

Microsoft

Also part of Microsoft’s ‘Server 2016’ is the new ‘network controller’ role. In a clustered setup it offers, just like VMware’s NSX, first-grade software-defined network (SDN) virtualization solutions, like a network load balancer, distributed firewall, VPN gateway, and much more. Microsoft assures that all code in the components is mature enough, a guarantee they can vouch for because all network components are battle hardened by hundreds of thousands of customers in Azure. The network controller code brought from Azure to your data center is an exact copy of the same software-defined network components that these customers are using in Azure. The network controller can be managed with ‘virtual machine manager’ (VMM) or with Microsoft’s new SDDC, Azure Stack!

SDDC Landscape

Because of all the innovations and, in particular, the availability of containers and PaaS service offerings in hosted clouds, companies are now able to host all their cloud services at an affordable cost in their own data centers using an SDDC stack, seriously threatening public cloud revenue. In 2016, software-defined storage and networking markets have started to grow exponentially. Gaining on traditional enterprise solutions, like a SAN or load balancing on hardware, SDDC in general is expected to grow from 21.7 billion in 2015 to 77.18 billion in five years. The SDDC hosted cloud market is currently occupied by Nutanix (watch this one), VMware, CloudStack, OpenStack, and OmniStack.
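
To put that forecast in perspective, the implied compound annual growth rate can be worked out from the two figures quoted above. The short Python sketch below assumes the growth is spread evenly over exactly five years; the `cagr` function name is just chosen for the example.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: the constant yearly growth that links both values."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the forecast above: 21.7 billion growing to 77.18 billion in five years.
rate = cagr(21.7, 77.18, 5)
print(f"Implied annual growth: {rate:.1%}")
```

Under that assumption the forecast works out to roughly 29 percent growth per year, compounded.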

VMware

New Hybrid SDDC solutions focusing on the end-user and PaaS services give traditional established IaaS management products like vCenter from VMware a very tough time. “vSphere” primarily comforted the IT Pro and organization for years. A complete SDDC solution not only comforts the IT Pro but also the IT admin, end-user, and, last but certainly not least, the developers. That’s why VMware had to change their strategy and also jumped onto the PaaS bandwagon by adding PaaS solutions like Pivotal’s Cloud Foundry and Docker containers to their products, shifting management and provisioning efforts away from their vSphere flagship towards SDDC PaaS solutions with vCloud Air (public) and vCloud Director (hosted). The customer adoption rate is quite low compared to well-established Microsoft solutions that can count on huge on-premise Windows and developer support. VMware is still struggling with this transition, breaking out of their traditional way of doing business focused on the IT Admin, and that is not easy when VMware has become a rocking boat in the midst of the Dell and EMC merger.

Stacks

Not all SDDC solutions are easy to manage. Several companies, like ZeroStack, Atomia, and Cisco with its MetaPod, combined their own automated software solutions on top of these stacks to make them more complete, easy, and user-friendly. Not only management solutions are built on top of these software-defined data center stacks, but also services and, in particular, PaaS services from several open source vendors. Cloud Foundry is the biggest player and is embraced by Azure and many other vendors, like Cisco and VMware. It is an open source cloud computing PaaS service and SDDC independent. It uses the underlying IaaS to offer its containerized PaaS services on. It’s compatible with AWS, VMware, Cisco, and OpenStack. However, let’s not forget, it’s Unix based and open-sourced. Before losing any business to other hosted or hybrid cloud SDDC offerings, Microsoft had to step in fast with their own SDDC stack, and what better competitive and innovative way than to bring an exact copy of software-defined Azure to your data center!

Conclusions

We are in the middle of rapid cloud application landscape shifts, consisting of a Technological and Cultural shift, where the center of gravity is moving from traditional ‘Infrastructure as a Service’ (IaaS) towards ‘Platform as a Service’ (PaaS) in a Hybrid Cloud. It’s pushing the cloud industry into the second cloud era, an elastic and consistent cloud application first era, where infrastructure comes second.

Containers, software-defined virtual resources, and a high degree of service provider automation are enabling the Technical shift. Developer software is regularly updated with all kinds of new functionality, in particular compatibility, development, and, more importantly, cloud deployment features. Development software creates the bridge to the infrastructure independent PaaS services. From there developers re-architect their older monolithic tier dependent client-server applications to cloud-native ones. Developers using the newest technologies are driving the transition towards flexible and efficient cloud usage in companies, and, as a result, changing company application business requirements, causing the Cultural shift. New business requirements and technical innovations go along with DevOps practices and Agile processes aiming for shorter development cycles and rapid deployments, offering companies enormous flexibility and efficiency. By also using a variable cloud consumption model, they are now able to effectively control and reduce their costs, and stay ahead in their industry.

Businesses now expect the full cloud experience, where applications and dependent resources like compute, network, and storage are deployed instantly; a truly elastic and consistent cloud experience offered in the public, hosted, or hybrid cloud. Here the hosted cloud is managed by a trusted local cloud provider offering virtual services hosted in a ‘Software-Defined Data Center’ (SDDC) solution, an SDDC brought from the public, open-source, and hardware vendor clouds locally to cloud providers and enterprises. Pushing away traditional storage and network solutions running on physical dedicated hardware as inflexible, outdated solutions from the past, the SDDC replaces these older technologies and now offers the IT organization a complete multi-tenant and automated out-of-the-box service provider data center solution.

Cloud adoption matures from puberty to adolescence. Security, often referred to as a cloud inhibitor, transforms to a key enabler towards cloud adoption. Customers recognize that the cloud may be more secure than their own data center. Other cloud adoption issues, regarding data placement (regulatory, privacy), vendor lock-in, and complexity, fade away when companies choose a consistent Hybrid multi-cloud strategy. A Hybrid cloud where they are able to ‘lift and shift’ their applications and workloads between their clouds based upon current applicable company policies and requirements.

Competition between public cloud providers, but also with hardware vendors in private/hosted clouds and on-premise, is causing an unprecedented growth in newly offered cloud services and technologies at a much higher pace than ever witnessed before, pushing the cloud industry even further and faster into a new hybrid application driven cloud era.

I hope I could shed some light on these interesting developments impacting the way of doing business in this new cloud era, how Azure Stack fits in, and what it could mean for your business and for you as a professional. With this bright new light in mind, where do you see yourself in five years’ time?

Try to contemplate this and head over to ‘Part 2’, which explains how ‘Azure Stack’, as a technical product, fits in this changing application driven cloud landscape.

Author

Twitter:@Ruud_Borst

LinkedIn | TechNet

References

Cloud reports

http://www.slideshare.net/North_Bridge/2015-future-of-cloud-computing-study/

http://www.rightscale.com/blog/cloud-industry-insights/cloud-computing-trends-2016-state-cloud-survey

https://azure.microsoft.com/en-us/blog/microsoft-azure-named-a-leader-in-gartner-s-enterprise-application-platform-as-a-service-magic-quadrant-for-the-third-consecutive-year/

Cloud landscape

https://azure.microsoft.com/en-us/blog/cloud-innovation-for-the-year-ahead-from-infrastructure-to-innovation/

http://searchvmware.techtarget.com/feature/Dell-and-EMC-deal-vSphere-6-among-biggest-VMware-takeaways-in-2015

SDDC

https://gallery.technet.microsoft.com/Understand-Hyper-Converged-bae286dd

http://www.wooditwork.com/2016/01/13/zerostacks-full-stack-infrastructure-application/

http://www.slideshare.net/happiestminds/whitepaper-evolution-of-the-software-defined-data-center-happiest-minds

http://www.slideshare.net/Visiongain/software-defined-data-centre-sddc-market-report-20152020

http://www.slideshare.net/ITBrandPulse/new-networking-technology-survey-analysis

http://www.cisco.com/c/en/us/products/cloud-systems-management/metapod/index.html

http://www.vmware.com/cloud-services/develop/vcloud-air-pivotal-cf

http://www.infoworld.com/article/3028173/analytics/ciscos-cto-charts-a-new-direction.html

http://www.businesscloudnews.com/2016/02/16/cisco-to-resell-pivotals-cloud-foundry-service-alongside-metapod/

http://www.cisco.com/c/en/us/products/collateral/switches/virtual-application-container-services-vacs/datasheet-c78-733208.html

http://www.networkworld.com/article/3032346/data-center/vmware-narrowing-sdn-gap-with-cisco.html

http://www.theregister.co.uk/2016/02/17/cisco_sdn_programming/

http://virtualgeek.typepad.com/virtual_geek/2015/09/scaleio-node-whats-the-scoop-and-whats-up.html

Microservices and containers

https://mva.microsoft.com/en-US/training-courses/exploring-microservices-in-docker-and-microsoft-azure-11796?l=FhWy8pmEB_004984382

https://azure.microsoft.com/en-us/blog/cloud-innovation-for-the-year-ahead-from-infrastructure-to-innovation/

http://www.forbes.com/sites/janakirammsv/2016/02/18/containers-as-a-service-trend-picks-up-momentum-with-the-availability-of-azure-container-service/

https://visualstudiogallery.msdn.microsoft.com/0f5b2caa-ea00-41c8-b8a2-058c7da0b3e4 (Visual Studio 2015 Tools for Docker)

https://azure.microsoft.com/en-us/documentation/articles/service-fabric-create-your-first-application-in-visual-studio/

https://channel9.msdn.com/Shows/Zero-to-Continuous-Deployment-of-Dockerized-App-for-Dev-Test-in-Azure?wt.mc_id=dx_MVP5001612

Security